In January and February 2025, the Health Research Authority asked people across health and social care research about their experience of study set-up at sites in the UK.

The results give us a baseline against which to measure the impact of the improvements we are making to speed up the study set-up process. These improvements come from the UK Clinical Research Delivery (UKCRD) programme, our work to develop our digital research systems, and our new strategy.

We are working with our partners across the UK to standardise study set-up at site by making processes more efficient, removing known blocks, and helping sites and sponsors work together in a consistent way.

Our plan is to repeat this survey every year to measure and assess the impact we are making.

Question themes

In the survey we asked a series of questions under the following themes:

- ease – how easy is it to set up studies?

- clarity – how clear are processes when setting up studies?

- consistency – how consistent was your experience across sites and settings?

- expectations – are studies usually set up in the way you expect them to be?

- efficiency – how efficient is the set-up process, and is there unnecessary duplication?

- speed – how fast is it to set up studies?

You can take a look at the results for each of these themes by clicking on each heading.

Summary of results

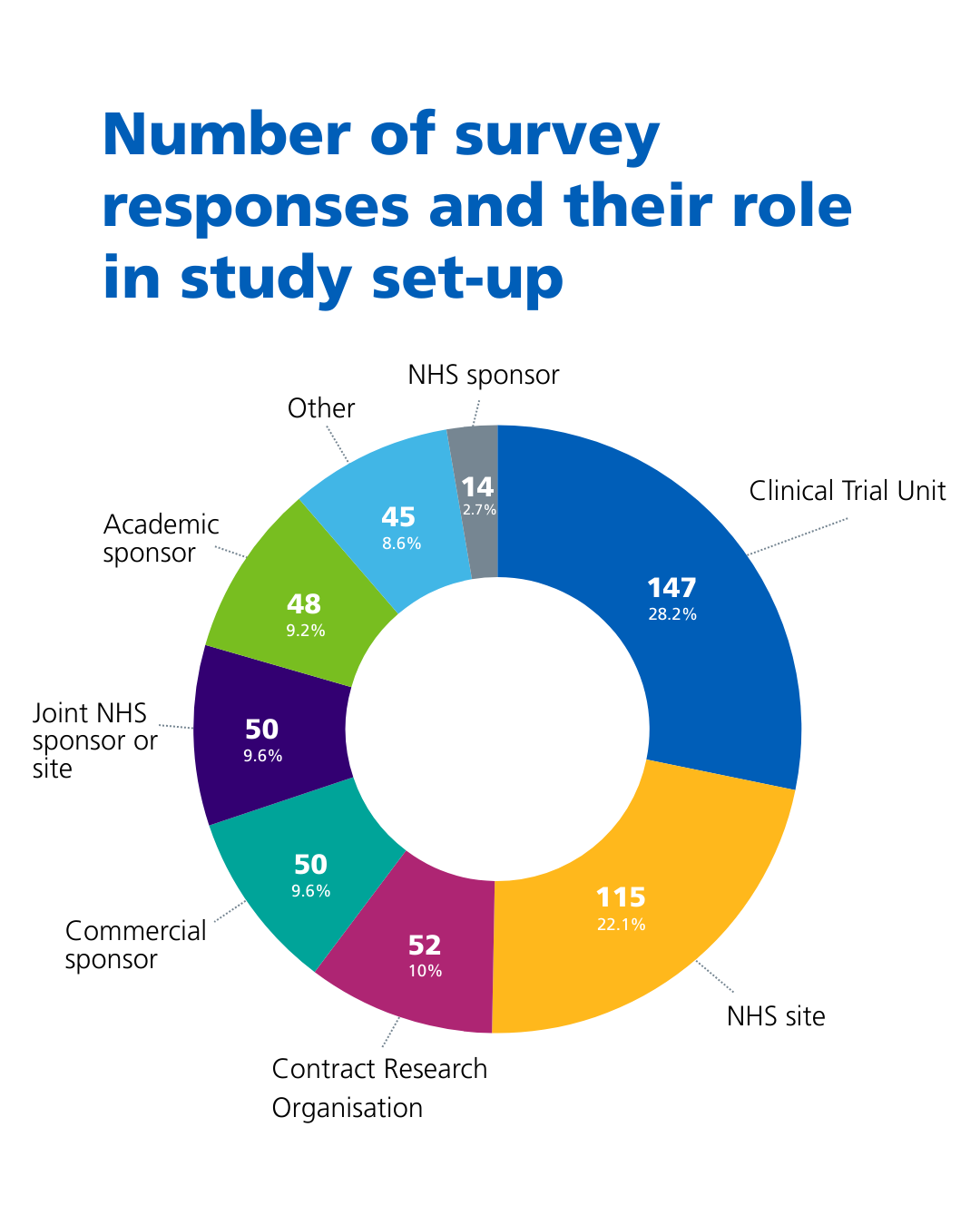

We analysed a total of 521 responses from individuals and organisations working across health and social research in the UK.

We asked respondents what their role was in study set-up using the following categories:

- Clinical Trial Unit – 147 responses (28.2%)

- NHS site – 115 responses (22.1%)

- Contract Research Organisation – 52 responses (10%)

- Commercial sponsor – 50 responses (9.6%)

- Joint NHS sponsor or site – 50 responses (9.6%)

- Academic sponsor – 48 responses (9.2%)

- Other – 45 responses (8.6%)

- NHS sponsor – 14 responses (2.7%)

Those who selected the ‘other’ category told us they were either an individual working in research (for example a research nurse, chief investigator or public contributor in research), another non-commercial sponsor, a funder or a private research site.
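The percentages in the list above follow directly from the response counts. As a minimal sketch, the calculation can be reproduced like this (the dictionary keys and the `share` helper are illustrative names, not part of the survey materials):

```python
# Response counts by role, taken from the list above.
# "CRO" is shorthand here for the research organisation category.
counts = {
    "Clinical Trial Unit": 147,
    "NHS site": 115,
    "CRO": 52,
    "Commercial sponsor": 50,
    "Joint NHS sponsor or site": 50,
    "Academic sponsor": 48,
    "Other": 45,
    "NHS sponsor": 14,
}

total = sum(counts.values())


def share(count, total):
    """Percentage of all analysed responses, rounded to one decimal place."""
    return round(100 * count / total, 1)


shares = {role: share(n, total) for role, n in counts.items()}

for role, pct in shares.items():
    print(f"{role}: {counts[role]} responses ({pct}%)")
```

The counts sum to the 521 responses analysed, so each share is taken against that total.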

Below we summarise the results for questions under each theme.

Summary of findings

Ease

Just over half (52.5%) of responses said it was easy (rated 6 out of 10 or more) to set up a study at a single NHS site, with an average rating of 5.6 out of 10. There were differences reported by sector, with commercial sponsors rating it harder (average rating of 4.5 out of 10) than those from within the NHS (average rating of 7.5 out of 10).

For setting up a study across multiple NHS sites, only 28% of respondents rated the process as easy (6 out of 10 or more), with an average rating of 4.2 out of 10. Feedback on this question was more closely aligned across sectors.

For setting up studies across multiple sites that were not NHS organisations (for example in care homes), only 25.7% of respondents said they thought the process was easy (rated 6 out of 10 or more) with an average rating of 4 out of 10.

40% of responses to this question selected ‘not applicable’ indicating that multiple settings outside of the NHS are not widely used.

Take a look at the detailed results for ease.

Clarity

We saw a generally positive reaction to UK guidance on study set-up being clear, with 69% of respondents rating the UK guidance as 6 out of 10 or more and an average rating of 6.5 out of 10. However, the feedback was more mixed for individual site processes during set-up, with 46% of respondents feeling processes were clear (rated 6 out of 10 or more) and an average score of 5 out of 10.

There were also strong differences by sector for clarity of individual site processes, with all sectors outside of the NHS rating clarity of site processes at set-up less than 6 out of 10 on average.

Commercial sponsors (3.84 out of 10) and Clinical Trials Units (CTUs) (4.38 out of 10) rated this the lowest. NHS organisations rated clarity of site processes the highest with an average rating of 6.34 followed by Contract Research Organisations (CROs) who gave an average rating of 5.02.

Feedback was generally positive for individual sponsor processes, with 55% of respondents rating the process as 6 out of 10 or more and an average score of 5.7 out of 10. Responses were consistent across sectors.

Take a look at the detailed results for clarity.

Consistency

We received mixed feedback when it came to consistency of the processes to set up research. We asked respondents to think about their experience of study set-up and how consistent they find the process in different sites and settings, and whether sites and sponsors follow a similar process.

69% of respondents felt that regulators were consistent (rated 6 out of 10 or more) during the study set-up process, with an average score of 6.5 out of 10.

Only 34% felt set-up was consistent (rated 6 out of 10 or more) across NHS sites with an average rating for this question of 4.3 out of 10. There were differences in experience by sector with the NHS and CROs reporting a more consistent experience (scoring on average 5.58 and 4.61 out of 10 respectively) and Clinical Trials Units and Commercial Sponsors the least consistent experience (scoring on average 3.70 and 2.82 out of 10 respectively).

Similarly, 34% felt set-up was consistent (rated 6 out of 10 or more) across non-NHS sites, with an average score of 4.5 out of 10. However, just under half of respondents answered this question as ‘not applicable’, indicating that set-up in non-NHS settings is not as common.

CROs and commercial sponsors rated this the highest on average (6.17 and 5.61 out of 10 respectively).

For consistency of experience with sponsors the average score was 4.7 out of 10 and for consistency across the UK the average score was 4.5 out of 10. CROs rated UK consistency the highest on average (5.57 out of 10) followed by NHS Organisations (5.13 out of 10).

Take a look at the detailed results for consistency.

Expectations

When it came to expectations for study set-up at site, we asked respondents to think about how they would expect studies to be set up and whether they are often surprised by requests from sites or sponsors after they have started the set-up process.

57% of respondents said that studies were set up in the way they expected (rated 6 out of 10 or more), with an average score of 5.7 out of 10. Answers to this question varied by sector, with the NHS and CROs rating this more highly (scoring 6.34 and 5.02 out of 10 on average respectively) than academic sponsors (4.72 out of 10), CTUs (4.38 out of 10) and commercial sponsors (3.84 out of 10).

Take a look at the detailed results for expectations.

Efficiency

For efficiency of site set-up we asked two questions. The first focused on how efficient respondents considered the study set-up process to be as a whole. Only 32% rated this 6 out of 10 or more, with an average score of 4.3 out of 10.

We then asked how respondents would rate the number of unnecessary steps or duplication in the study set-up process.

59% of respondents said they thought there was duplication or unnecessary steps in the process (rating 5 or less out of 10) giving an average rating of 5 out of 10.

Commercial and academic sponsors, CTUs and those categorised as ‘other’ felt there are more unnecessary steps than the NHS or CROs, all rating duplication between 4 and 5 out of 10. NHS organisations and CROs rated this 5.59 and 5.45 on average respectively.

Take a look at the detailed results for efficiency.

Speed

We saw clear feedback when we asked how respondents would rate the speed at which studies are set up at site in the UK.

76% of respondents rated the speed of site set-up as 5 out of 10 or lower, with an average score of 3.8 out of 10. The NHS and CROs gave a higher rating for speed than other sectors but all sectors rated it to be under 5 out of 10 on average.

Take a look at the detailed results for speed.

Summary analysis of open comments

This survey was not designed to ask for feedback on specific areas for improvement or new interventions for study set-up as we have existing mechanisms for identifying these. However, an open comments box was provided in the survey for respondents to provide their feedback, which was helpful to add to our existing intelligence.

All comments received aligned to our key improvement themes of ease, clarity, consistency, efficiency and speed. They also provided helpful insight into current challenges.

We have fed these insights into our existing workstreams (for example duplication of technical assurances and the interpretation of the IR(ME)R regulations for radiation or National Contract Value Review) and will continue to do so.

Conclusions

Overall, the responses we received in the survey highlight the need for significant improvement in the efficiency of study set-up at site.

There is cross-sector acknowledgement of unnecessary duplication in the process. The feedback shows we need to improve efficiency and make the process easier, to ensure a more consistent experience and match expectations from people working across research in how the study set-up process should happen.

Feedback was clear across all sectors that they consider the process to be too slow.

As expected, set-up is generally found to be easier at a single NHS site, and becomes harder and less consistent across multiple NHS organisations and settings. Standardising and streamlining set-up across organisations is an important area for improvement. Respondents had less experience of setting up health studies in non-NHS settings, but those who did have this experience rated it similarly to set-up in NHS sites.

Importantly, each sector rated its own processes more favourably than those of other sectors. Although guidance was perceived to be relatively clear, this disconnect suggests there is more that can be done to improve alignment and shared understanding of the specific needs and processes between sectors.

The NHS (including sites and sponsors) generally answered the questions more favourably than commercial or academic sponsors. This is important for us to understand when designing interventions that aim to address both customer expectations and site set-up in practice.

In conclusion, this baseline survey highlights a health and social care research system in need of greater alignment and a simpler process – both of which will help speed up study set-up.

Our ongoing work with partners as part of the UKCRD programme aims to address these issues. The information from this survey will provide a valuable baseline for us to track satisfaction with the changes and improvements made as part of the programme.

Methodology

Our survey was open for four weeks from 31 January 2025 to 28 February 2025.

We promoted the survey through our newsletters, on social media and by inviting stakeholders to share feedback. We also asked our partner organisations and sector representatives to complete and share the survey via our study set-up advisory board and HRA research champions meetings.

The survey was not primarily designed to ask for specific details on what is or isn’t working well for study set-up at site. However, there was a free text box as part of the survey where respondents could share comments.

The survey used a Likert scale from 0 to 10 for each question. For analysis purposes, we treated a rating of 6 or over as ‘good’. The exceptions were the duplication and speed questions, where we reported the proportion of ratings of 5 or less (indicating more duplication or slower speed).

Not every question was answered by every respondent. However, we have included the raw data to accompany the charts for each question so you can see the total number of respondents.

Where we have indicated an ‘average’ score we have used a ‘mean’ average.
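As a rough illustration of the analysis described above, the sketch below computes the two headline figures reported for most questions: the percentage of ratings at 6 or over, and the mean rating. The `summarise` function and the example ratings are hypothetical and are not survey data. Excluding skipped or ‘not applicable’ answers before calculating is an assumption made for this sketch, not a confirmed detail of the analysis.

```python
from statistics import mean

GOOD_THRESHOLD = 6  # a rating of 6 or over is treated as 'good'


def summarise(ratings):
    """Return (percentage rated 6 or over, mean rating), each to 1 decimal place.

    None stands for a skipped or 'not applicable' answer. Excluding these
    is an assumption of this sketch.
    """
    answered = [r for r in ratings if r is not None]
    if not answered:
        raise ValueError("no answered ratings to summarise")
    good = sum(1 for r in answered if r >= GOOD_THRESHOLD)
    return round(100 * good / len(answered), 1), round(mean(answered), 1)


# Hypothetical ratings on the 0 to 10 scale, for illustration only.
example = [7, 3, 6, None, 5, 8, 2, 6, None, 4, 9]
pct_good, average = summarise(example)
print(f"{pct_good}% rated 6 or over, mean rating {average} out of 10")
```

For the duplication and speed questions, the same approach would apply with the comparison changed to ratings of 5 or less.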

Limitations of this survey

The survey only indicates a snapshot in time (the month of February 2025) to provide a baseline and is limited to providing a general rating score for each of the areas we hope to improve.

We found the majority of our respondents via a general broadcast. This means answers may be more negative than the views of the wider community if those who completed the survey did so to share particular issues or problems. To mitigate this, we asked our regular partner organisations and representative bodies to complete the survey, and we will ask them for feedback more often to seek advice on whether we are making an improvement. Whilst we had a good response overall, we would have liked more responses from industry.

The Likert scale chosen went up to 10, which might elicit more average response ratings than if the scale was out of 5 or 7.

The question which asked respondents to tell us what part of health and social care research they worked in enabled a few people to select multiple non-commercial organisations. This meant that we needed to decide what group they best represented. This question will be changed for the next survey and will also take into account other non-commercial sponsors and funders.

Definitions

What do we mean by study set-up at site?

‘Study set-up at site’ is the term we use to describe the activities that a site needs to put in place to deliver a study and begin recruitment.

This does not include the regulatory approvals process, grant funding or sponsorship.

What do we mean by research sites?

Research sites are the organisations with day-to-day responsibility for the locations where a research project is carried out.

These organisations may have various roles in any given study and can exist in several settings, for example in hospital, community-based, primary care or residential settings.